(10-October-22)

Let’s start off with a true confession. I have GAS. Gear Acquisition Syndrome. I like to get new stuff and try it out. However, neither of these items is particularly new. So, why get the iPhone 14 Pro and the Apple Watch Series 8 now? Let’s take them one at a time.

Back when the pandemic first started, I had an iPhone 10 (or is it X? I’m still not sure what I am supposed to say). Unfortunately, something happened to its near field communication (NFC), and I needed that. Not “I really want to have it” but “I have health-related devices that use it, so I need it fixed.” Because of COVID, I couldn’t get good help from Apple: phone support sent me to the store, and the store sent me to phone support. You know, the basic support circle-j***. I threw up my hands and got a Google Pixel 5 as a replacement.

Now, I like the Pixel 5. It’s a fine phone with a really good camera. I think the Android platform lacks some of the fit and finish of Apple’s iOS, but nothing that was a real deal breaker for me. If it weren’t for a couple of things, I would have been content staying with Android. In fact, the transition from Apple to Android was much easier than the transition back. More on that in a moment.

What sold me on going back to Apple in general, and the iPhone 14 Pro in particular, were the emergency communications tools and the camera. As a ham radio operator I probably understand the limitations of wireless better than most people, but even so, every carrier has let me down while road tripping in places like South Georgia or the Blue Ridge Mountains. While I always seem to find a way in an emergency, I don’t like knowing I might go hours without coverage. Emergency messaging via satellite will fill in those gaps and give me peace of mind when I am on the road or in the mountains, and that’s a huge value to me.

The other item I mentioned is the camera. I like to take pictures and videos, so any change in those areas gets my attention. As I mentioned, I am big on travel, and one of the things I have been trying to do is reduce my load. When I go to the mountains for pictures, I typically take a DSLR, tripods, and computers to back up SD cards, and it’s a lot of stuff. I felt that with the new camera (48 megapixels, lots of shooting modes and options, plus a much smaller footprint) I could break free of my DSLR. With a trip to England coming up, hitting 8 areas in 10 days, I wanted to keep my load low, and this will help. The picture quality is very good compared with the Pixel 5, not that the Pixel 5 is bad at all. See my first impressions blog post for a bake-off. This article on PetaPixel gets into the upgrade benefits.

So, now you know why I made the switch. The how was painful, but it’s one-time pain. Some brief takeaways:

– With the switch TO Android, there was a nice migration tool with a custom cable that connected the two devices. No cable here, and I couldn’t even get the phones to talk to each other despite an app that promised to do that very thing.

– My wireless provider is AT&T Prepaid, and they were not prepared to handle this type of conversion. The iPhone 14 Pro only uses an eSIM, while the Pixel 5 uses a physical one. I was without service for about 6 hours while I was sent from the store to phone support and almost back to the store before a manager in chat support saved me. I hope my experience became a support article so others don’t go through that pain.

Let’s talk a little about the watch. I had an Apple Watch Series 3, and it was fine. I didn’t feel like it was a critical device for me, and I actually handed it down to a family member because I am more of a fan of mechanical watches. I did try a couple of Android watches, one from Samsung and one inexpensive knockoff. I wasn’t impressed and didn’t really integrate them into my lifestyle.

In the five versions since then, however, Apple has focused more on health apps, and I have become more focused on my health. It was time to give the watch another try. A few weeks after getting the phone, I went to West Farms Mall outside of Hartford and shopped at the Apple Store. My biggest question was whether to go with the Apple Watch Ultra or the Series 8. As much as I have the GAS I admitted to earlier, I couldn’t bring myself to spend the extra $300 on the Ultra. First, I didn’t like the size. While I am OK with a big watch, that particular one just seemed very thick. Second, I didn’t need cellular connectivity on my watch. I don’t get separated from my phone often enough to need additional access, and I don’t want to pay the monthly vig for the privilege. Now, in fairness, I don’t know if cellular activation is required, but it’s one more thing to break. So, I went with the base Series 8.

So far I am really pleased with all the integrations on the Series 8: sleep tracking, exercise apps, health apps, and controlling podcasts on the phone in my pocket are all good things. I also like the battery life. I charge it while I’m in the shower, and it runs most of the day without issues. There are some nice watch faces too, with different complications. That’s an area I want to explore more as I go.

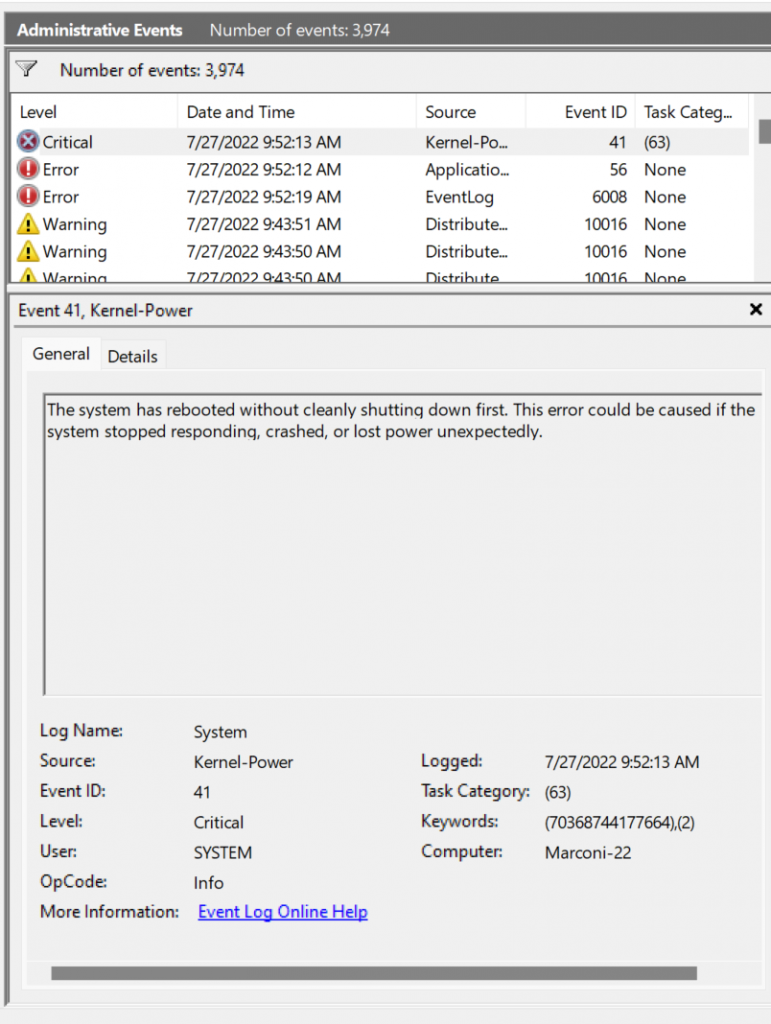

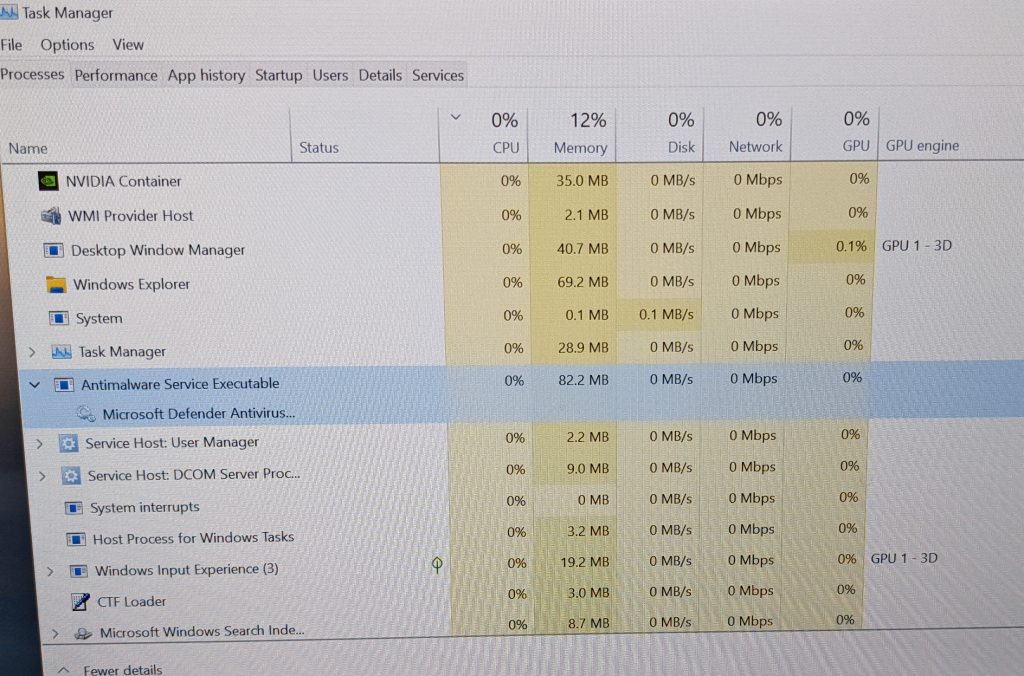

So, outside of the computer (a custom-built Windows PC with a bug that is fading), I am all in on Apple again. I’m not feeling like an Apple fanboy, just a user. One of the biggest lessons for me over the last year or so is that you may as well shop for the features you want and be prepared to put in the time to fight with support, because no company these days is looking to provide world-class support.

The iPhone and watch are headed out on their first long road trip. I’ll post an update on performance if there is something significant to share. Thanks for reading, and if you have any thoughts on this, please send me a tweet at @N4BFR on Twitter and join the conversation.